New Kaggle contest! Estimating auction prices for second-hand construction equipment. Didn’t know bulldozer auctions were such a big thing. We had lots of historical data (400,000 auctions, starting from 1989) and we had to estimate prices a few months “into the future” (2012).

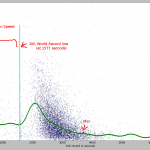

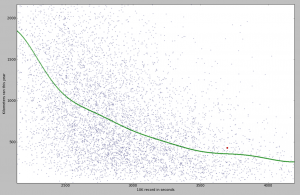

Getting to know the data

Data cleaning is a very important step when analyzing most datasets, including this one. There were a lot of noisy values. For example, a lot of the equipment appeared to have been made in the year 1000. I’m no expert in bulldozers, but the guys who are (providing us the data) told us that’s noise. So, to help the competitors, they provided an index with info regarding each bulldozer in the dataset. After “correcting” the train/test data using the index, I got worse results for my model. In the end, I used the index only for filling in missing values. Other competitors used the original data along with the index data – that might have worked a little better.
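Using the index only to fill gaps, rather than to overwrite existing values, can be sketched with pandas roughly as below. The column names (`MachineID`, `YearMade`), the toy data and the `fill_from_index` helper are assumptions made up for illustration, not the code from the competition.

```python
import numpy as np
import pandas as pd


def fill_from_index(auctions, machine_index, key, columns):
    """Fill only the missing values in `columns` from a per-machine lookup table.

    Values already present in `auctions` are left untouched, even when the
    index disagrees with them.
    """
    lookup = machine_index.drop_duplicates(subset=key).set_index(key)
    filled = auctions.copy()
    for col in columns:
        from_index = filled[key].map(lookup[col])
        filled[col] = filled[col].where(filled[col].notna(), from_index)
    return filled


# Hypothetical toy data: one noisy year, one missing year, one disagreement.
auctions = pd.DataFrame({
    "MachineID": [1, 2, 3],
    "YearMade": [1000.0, np.nan, 2004.0],
})
machine_index = pd.DataFrame({
    "MachineID": [1, 2, 3],
    "YearMade": [1998.0, 2001.0, 2005.0],
})

filled = fill_from_index(auctions, machine_index, "MachineID", ["YearMade"])
print(filled)
```

Only the missing year gets replaced here. The noisy 1000 and the conflicting 2004 stay as they were, which is the conservative behaviour described above.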

Building the model

My first model was composed of two Random Forest Regressors and two Gradient Boosting Regressors (Python, using sklearn), averaged with equal weights. The parameters were set by hand, after very little testing.
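An equal-weight average of two forests and two boosted models might look something like the sketch below. The hyperparameters, the `AveragedEnsemble` class and the synthetic data are placeholders chosen for illustration. The original hand-set parameters are not given in this post.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor


class AveragedEnsemble:
    """Fit several regressors on the same data and average their predictions."""

    def __init__(self, models):
        self.models = models

    def fit(self, X, y):
        for model in self.models:
            model.fit(X, y)
        return self

    def predict(self, X):
        # Equal weights: a plain mean over the per-model predictions.
        return np.mean([model.predict(X) for model in self.models], axis=0)


def make_first_model(seed=0):
    # Hypothetical settings; the point is only that the four members differ.
    return AveragedEnsemble([
        RandomForestRegressor(n_estimators=50, max_features=0.5, random_state=seed),
        RandomForestRegressor(n_estimators=50, min_samples_leaf=3, random_state=seed + 1),
        GradientBoostingRegressor(n_estimators=100, max_depth=3, random_state=seed),
        GradientBoostingRegressor(n_estimators=100, max_depth=5, learning_rate=0.05,
                                  random_state=seed + 1),
    ])


# Synthetic stand-in for the auction features and prices.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = 3.0 * X[:, 0] + np.sin(X[:, 1]) + rng.normal(scale=0.1, size=200)

model = make_first_model().fit(X, y)
preds = model.predict(X)
```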

The first idea for an improvement came after noticing that a lot of the features were relative to the bulldozer category. There were six categories: ‘Motorgrader’, ‘Track Type Tractor, Dozer’, ‘Hydraulic Excavator, Track’, ‘Wheel Loader’, ‘Skid Steer Loader’ and ‘Backhoe Loader’. So I segmented the data by category and trained an instance of the first model (2 RFR + 2 GBR) on each subset. This generated a fair improvement.
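Training one model per segment can be sketched as below. The column names (`category`, `age`, `price`), the synthetic data and both helper functions are assumptions for illustration. A single Gradient Boosting Regressor stands in for the full ensemble to keep the example short.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingRegressor


def fit_per_segment(df, segment_col, feature_cols, target_col, make_model):
    """Train one fresh model on each subset of rows sharing a segment value."""
    models = {}
    for segment, part in df.groupby(segment_col):
        models[segment] = make_model().fit(part[feature_cols], part[target_col])
    return models


def predict_per_segment(models, df, segment_col, feature_cols):
    """Route each row to the model trained on its segment.

    Rows from a segment never seen in training are left as NaN.
    """
    preds = pd.Series(np.nan, index=df.index, dtype=float)
    for segment, part in df.groupby(segment_col):
        if segment in models:
            preds.loc[part.index] = models[segment].predict(part[feature_cols])
    return preds


# Synthetic data: two categories with different price levels.
rng = np.random.default_rng(0)
n = 300
df = pd.DataFrame({
    "category": rng.choice(["Wheel Loader", "Backhoe Loader"], size=n),
    "age": rng.uniform(0, 20, size=n),
})
base = df["category"].map({"Wheel Loader": 60000.0, "Backhoe Loader": 30000.0})
df["price"] = base - 1500.0 * df["age"] + rng.normal(scale=500.0, size=n)

models = fit_per_segment(
    df, "category", ["age"], "price",
    lambda: GradientBoostingRegressor(n_estimators=50, random_state=0),
)
preds = predict_per_segment(models, df, "category", ["age"])
```

The same helpers would work with any finer grouping column, at the cost of fewer training rows per model.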

I continued along this line by segmenting the data even more, based on subcategory. There were around 70 subcategories. Again, segmenting and training 70 models generated another improvement. By this time, the first model (trained on the whole data) wasn’t contributing at all compared to the other two, so I removed it from the equation. With this setup, I was in 10th place on the public leaderboard one week before the end of the competition. The hosts released the leaderboard dataset, so we could also train on it, and froze the leaderboard (no use for it when you can train on the leaderboard data).

Putting the model on steroids

Usually, when you want to find the best parameters for a model, you do grid search. For my “2 RFR + 2 GBR” model, I had just tested a few parameters by hand. Time to put the Core i7 to work! But instead of grid search, which tries all combinations and keeps the best, I tried all combinations and kept the best 20-30. Afterwards, I combined them using a linear model (in this case, Ridge Regression).
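The "keep the best few, then blend" idea can be sketched as follows. The grid, the choice of `k`, the `alpha` value and the function names are assumptions for illustration. The sketch also fits the blender on the same validation predictions it used for ranking, which is a simplification and tends to give optimistic scores.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import ParameterGrid


def fit_top_k_blend(X_train, y_train, X_valid, y_valid, grid, k):
    """Try every parameter combination, keep the k best, blend them with Ridge."""
    candidates = []
    for params in ParameterGrid(grid):
        model = GradientBoostingRegressor(random_state=0, **params)
        model.fit(X_train, y_train)
        preds = model.predict(X_valid)
        candidates.append((mean_squared_error(y_valid, preds), model, preds))

    # Lowest validation error first; keep only the k best candidates.
    candidates.sort(key=lambda c: c[0])
    top = candidates[:k]

    # Each kept model contributes one column of validation predictions.
    stacked = np.column_stack([preds for _, _, preds in top])
    blender = Ridge(alpha=1.0).fit(stacked, y_valid)
    return [model for _, model, _ in top], blender


def predict_blend(models, blender, X):
    return blender.predict(np.column_stack([m.predict(X) for m in models]))


# Synthetic data standing in for the real features and prices.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 3))
y = 2.0 * X[:, 0] - X[:, 1] ** 2 + rng.normal(scale=0.1, size=400)
X_train, y_train, X_valid, y_valid = X[:250], y[:250], X[250:], y[250:]

grid = {"n_estimators": [50, 100], "max_depth": [2, 3], "learning_rate": [0.05, 0.1]}
models, blender = fit_top_k_blend(X_train, y_train, X_valid, y_valid, grid, k=3)
blended = predict_blend(models, blender, X_valid)
print(blender.coef_)
```

Nothing constrains the printed Ridge coefficients to be positive or to sum to one.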

I also tried other models (besides RFR and GBR) to add to the cocktail. While nothing even approached the performance GBR was getting, some managed to improve the overall score. I kept NuSVR and Lasso, also trained on (sub)categories.

Outcome and final thoughts

Based on my model’s improvement over the final week (the one with the frozen leaderboard) and my estimate of my competitors’ improvements, I expected a final ranking of 5th – 7th. Unfortunately, I came 16th. I made two errors in my process:

The first one was the improper use of the auction year when training. This generated a bias in the model. Usually, I was training on auctions that took place until 2010 and testing on auctions from 2011. Nothing wrong here. Then I also trained on auctions until 2011 and tested on the first part of 2012 (public leaderboard data). Nothing wrong here. For the final model, I trained on the auctions until the first part of 2012 and tested on the second part of 2012. BOOM!

Prices fluctuate a lot during the year. They are usually higher during spring and lower during fall. When I was testing on data from a whole new year, there was no bias for that year, since its value was one the model had not seen in training. But when I tested on fall data from 2012 with a model trained on spring data from that same year, the model had learned to associate 2012 with spring prices, so the estimates came out a little too high.
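A validation split that mirrors the final setup (train up to some point within a year, test on the rest of that same year) would be one way to surface this kind of bias before the deadline. Below is a minimal sketch, assuming a hypothetical `saledate` column and a made-up `split_mid_year` helper. It is not something that was done in this competition.

```python
import pandas as pd


def split_mid_year(df, date_col, year, cutoff_month):
    """Train on everything before the cutoff, validate on the rest of that year."""
    dates = pd.to_datetime(df[date_col])
    cutoff = pd.Timestamp(year=year, month=cutoff_month, day=1)
    year_end = pd.Timestamp(year=year + 1, month=1, day=1)
    train = df[dates < cutoff]
    valid = df[(dates >= cutoff) & (dates < year_end)]
    return train, valid


# Hypothetical toy auctions spanning three years.
df = pd.DataFrame({
    "saledate": ["2010-03-01", "2011-02-15", "2011-04-30",
                 "2011-05-01", "2011-10-10", "2012-01-05"],
    "price": [30000, 32000, 33000, 31000, 28000, 29000],
})

# Train through April 2011, validate on May to December 2011.
train, valid = split_mid_year(df, "saledate", year=2011, cutoff_month=5)
print(len(train), len(valid))
```

With a split like this, the validation year already appears in training, but only through its early months, just as 2012 did in the final model.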

The second error was with averaging the 20-30 small models trained on (sub)categories. In a previous contest I used neural networks for this, but the final score fluctuated too much for my taste. I also tested genetic algorithms, but I thought the scores were not very good. Ridge regression gave significantly better results. There was one small problem though: it assigned positive as well as negative weights. Usually, ensembling weights are positive and sum to one. So price estimates are averages from a lot of predictions and they generalize well to unseen cases. With negative weights, estimates are no longer averages and generalizing gets a little unpredictable.
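For comparison, blend weights that are positive and sum to one can be obtained with non-negative least squares followed by a normalisation. This is a sketch of one possible alternative, not the method used here. The `nonnegative_blend_weights` helper and the synthetic predictions are made up for illustration, and normalising after the fit is a shortcut rather than a true sum-to-one constraint.

```python
import numpy as np
from scipy.optimize import nnls


def nonnegative_blend_weights(preds, y):
    """Least-squares blend weights constrained to be >= 0, rescaled to sum to 1.

    `preds` has one column per model and one row per validation example.
    """
    weights, _ = nnls(preds, y)
    total = weights.sum()
    if total == 0:
        # Degenerate case: fall back to a plain average.
        return np.full(preds.shape[1], 1.0 / preds.shape[1])
    return weights / total


# Synthetic "models": the true price plus noise of increasing size.
rng = np.random.default_rng(0)
y = rng.uniform(10000, 80000, size=500)
preds = np.column_stack(
    [y + rng.normal(scale=s, size=500) for s in (1000, 3000, 9000)]
)

weights = nonnegative_blend_weights(preds, y)
blended = preds @ weights
print(weights)
```

With weights like these, every blended estimate lies between the lowest and highest individual prediction for that row. That guarantee is what negative weights give up.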

For posterity, the code is here. I recommend checking out the winning approaches on the competition’s forum.