If you’re reading this, you probably know I’m a big fan of Kaggle. Unfortunately, getting good results in their contests requires a significant time investment, and I’ve lacked free time lately. But when Catalin Tiseanu‘s proposition to join the Deep Learning HackerEarth Challenge overlapped with an easier period at work, I said sure. The contest was only one month long and it was already half way in, so it was a small time commitment. I also brought my colleague Alexandru Hodorogea in as well.

First off, a few words about HackerEarth’s platform. OMG! You realize just how finessed Kaggle is in comparison. Just to read the challenge statement, you need to have an account and set up a team. That explains why they have over 4000 competitors, yet only ~200 that actually submitted something. If two people have both read the statement and want to form a team, well.. they can’t. Team merging is allowed, just not possible given the primitive interface. One of them will have to create another account. They have no forum, so it’s hard to have a conversation, share something, discuss ideas. The organizers are very slow (publishing the final leaderboard took about 10 days, posting the winning solutions still hasn’t happened and it’s been one month since it ended).

On top of that, the challenge’s dataset is freely available online. Of course, the rules forbid using any external data. The organizers mentioned they’ll manually review the code for the winning models. Still, most people are not in this for the money, so having somebody get a perfect score was inevitable. While these are easy to prune, you can still use the dataset in many less obvious ways, making the leaderboard meaningless.

A few words about the data: the training set consists of 3200 images in 25 classes. Another 1700 are in the test set. The images are conveniently small (256×256), usually of good quality, but sometimes blurry or not very clear. I think a human annotator would struggle a bit figuring out what type of product is featured in each photo.

Even though they forbid external data, the rules allow using pretrained models, and that’s how we started. We used Keras because:

– it’s very easy to use

– it comes packed with high quality pretrained models; I still can’t believe how easy they are to use; you can have a state-of-the-art model up and running in 10 lines of code

– it’s used in Jeremy Howard’s MOOC (although he mentioned he’s going to migrate the code to Pytorch)

You’ve probably guessed this competition requires some serious processing power. On my GTX 760 GPU, getting one model to something usable (~90%) took about 50 hours. Fortunately, Catalin had access to a Tesla K80, which was about 5 times faster. Not only that, but it had 2 cores, so we could work in parallel. That really helped us, especially once we got to the ensembling part.

Before going into what we did and why we did it, here is the code. Going through it very fast, we have:

– a script for preparing the data (preprocess_data.py dooh)

– a collection of useful functions (util.py)

– several scripts for training some networks (inc_agg_aug_full.py, inc_agg_aug_full_part_2.py and hodor_resnet.py)

– the ensembling script (ensemble.py)

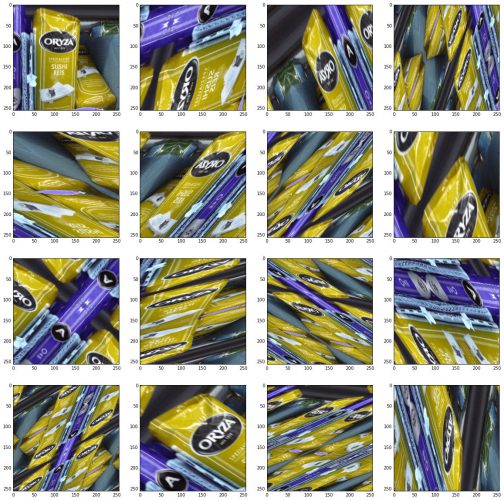

Finetuning a pretrained Keras model would get you to about 80% on the leaderboard. Let’s see how we got to 95%. First thing – do data augmentation. This is even more important here since the dataset is very small (3200 images, with some classes having less than 100 images). We used imgaug and cranked up the knobs as long as our networks were able to learn the data. On the most aggressive setup, an input image (top left corner) would get distorted to what you see below. Surprisingly, the networks were able to generalize to the test data (despite some overfitting).

Aggressive augmentation got us to around 90-91% for a single network. We managed to push this by an extra ~1% by doing test-time augmentation. Instead of passing each test image once, we generated 10 augmentations and averaged the scores.

Finally, ensembling brought the final ~2% on the table. We didn’t have time to generate a large variety of models, so we did “poor man’s ensembling”: we added a series of checkpoints in the final stages of training a network, so we’d have not one, but several slightly different networks after training finished.

Something we put a lot of effort in, but didn’t work, was pseudolabeling. The idea is simple: train network A on the train data and evaluate on the test data. Then use both the train and test data (with the predicted labels) to train network B. Finally, use network B to generate the final predictions on the test data. We tried several approaches here, but neither worked and we don’t have an understanding of why it works on some problems and not on others.

Overall, it was a good learning opportunity for us: finetuning pretrained CNN models, working as a team across different time zones, managing GPU resources given a tight deadline and seeing how far we can push a Jupyter Notebook server until we crash it (not very far apparently).

PS: Be sure to check out Catalin’s notes here.

Thank you for sharing your approach.

It is interesting that just by progressively increasing the degree of augmentation you were able to increase accuracy so dramatically.

I have stuck with just 81% accuracy on a single model and underfitting if the level of augmentation was increased.

Hi Andrei

Thanks for posting posting your approach. I have few questions

In all the scripts i.e inc_agg_aug_full.py, inc_agg_aug_full_part_2.py and hodor_resnet.py

only inc_agg_aug_full_part_2.py is using validation data which again is commented. Where as inc_agg_aug_full.py and hodor_resnet.py doesn’t use validation data. Why is this. I guess, while training, I must split the data into train and val, and pass in model.fit_generator().

Is this correct ?

When working locally, you split the contest’s training data in two: local training and local validation. After you’ve finished developing your model, you retrain everything on all of the contest’s training data. At this point, you no longer have any data to validate locally, that’s why the validation code is commented or missing.

Hi again

I could not find these

(‘inc_agg_aug_full_gpu1_cp4’, 1, 0),

(‘inc_agg_aug_full_gpu1_cp4’, 1, 1),

(‘inc_agg_aug_full_gpu1_cp5’, 1, 0),

(‘inc_agg_aug_full_gpu1_cp5’, 1, 1), these models that are referenced in ensemble script

https://github.com/andreiolariu/deelearning-hackerearth/blob/master/ensemble.py

I did not upload any models. Each model is several hundred megabytes in size.