Yesterday I took part in the second uberVU hackathon. The theme of the day was visualizing data. I joined the team trying to improve the tagcloud (my teammates were Alex Suciu and Dan Filimon).

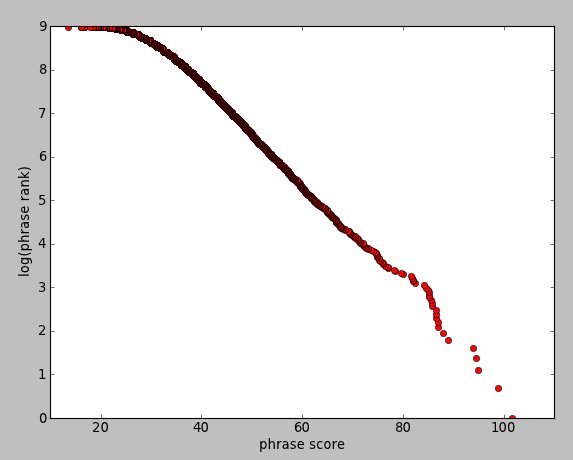

We came up with the idea of highlighting relations in a tagcloud. More precisely, we assumed a normal phrase is of the form “<noun – who?> <verb – what?> <noun – to whom?>” (and this can easily be expanded to include adjectives, adverbs and pronouns). This is, pretty much, the basis of natural language parsing. Since a full parser is too slow for huge volumes of data (which was the case here), we thought of simplifying it. Our accuracy would be lower than that of an advanced parser, but over tens of thousands of tweets (that’s what we were working on) the aggregate results should eventually, after some more hours of work, be similar.
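To give a feel for the kind of simplification we had in mind, here is a minimal sketch in Python. It is not the hackathon code: the word lists, the `max_gap` parameter and the function names are all assumptions made up for illustration. It only looks for a known verb followed, within a few skippable words, by a known noun, and counts the resulting (verb, noun) pairs.

```python
import re
from collections import Counter

# Hypothetical, hand-picked lexicons (assumption): a real system would use
# a much larger word list or a part-of-speech tagger.
VERBS = {"have", "got", "want", "need", "buy"}
NOUNS = {"iphone", "phone", "case"}
# Words allowed to sit between the verb and its object.
SKIPPABLE = {"a", "an", "the", "my", "new", "this", "that"}


def extract_pairs(text, max_gap=3):
    """Return (verb, noun) pairs found by a naive left-to-right scan."""
    tokens = re.findall(r"[a-z']+", text.lower())
    pairs = []
    for i, token in enumerate(tokens):
        if token not in VERBS:
            continue
        # Look at up to max_gap tokens after the verb.
        for candidate in tokens[i + 1 : i + 1 + max_gap]:
            if candidate in NOUNS:
                pairs.append((token, candidate))
                break
            if candidate not in SKIPPABLE:
                # Something unexpected sits in between: give up on this verb.
                break
    return pairs


def count_pairs(tweets):
    """Count (verb, noun) pairs over a collection of tweet texts."""
    counts = Counter()
    for tweet in tweets:
        counts.update(extract_pairs(tweet))
    return counts
```

Calling `count_pairs(tweets).most_common(10)` on a list of tweet texts would give a ranking of pairs. A scan like this misses plenty of phrases that a real parser would catch, which is exactly the accuracy we were willing to trade for speed.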

The code is available here. Unfortunately, since there weren’t enough frontend guys around, there’s no way of visualizing the results. Still, we can read them and comment on them. We tracked tweets containing the “iphone” keyword (about 5000 in total) and noticed an interesting pattern in our results: people express a lot of possession over iPhones. The second, third and sixth most frequent (verb, noun) relations were “have iphone”, “got iphone” and “want iphone”. A few places further down we also found “need iphone” and “buy iphone”.

An interesting future project would be to track these pairs for a new product and see how they evolve, from the rumours, through the first announcement and the release, up to a few months later, when pretty much everybody has it.
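A rough sketch of how that tracking could work, assuming each extracted pair comes with the date of its tweet. The `pair_trends` function and its input format are hypothetical; nothing like this was built during the hackathon.

```python
from collections import Counter, defaultdict
from datetime import date


def pair_trends(observations):
    """Group pair counts by calendar month.

    observations: iterable of (date, (verb, noun)) tuples (assumed format).
    Returns a dict mapping each pair to a Counter of {(year, month): count}.
    """
    trends = defaultdict(Counter)
    for day, pair in observations:
        trends[pair][(day.year, day.month)] += 1
    return trends


# Invented sample data, only to show the shape of the input.
sample = [
    (date(2011, 1, 5), ("want", "iphone")),
    (date(2011, 1, 9), ("want", "iphone")),
    (date(2011, 3, 2), ("have", "iphone")),
]
trends = pair_trends(sample)
```

Plotting each pair's monthly counts would then show whether, say, “want” fades and “have” grows after the release.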